Forms created on Formcrafts are shared using a unique link, like app.formcrafts.com/my-form. Embedded forms use the same URL. Optimizing the speed of our form page is a huge priority since it makes up over 95% of our page requests.

We measure it using LCP (Largest Contentful Paint) time.

How long does a user wait between initiating a request and seeing a fully rendered form?
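In the browser, LCP can be read with a `PerformanceObserver`. The sketch below is one way to do that, and not necessarily how the numbers in this post were collected. The names `finalLcp` and `observeLcp` are hypothetical.

```typescript
// Subset of the browser's LargestContentfulPaint entry that we need here.
interface LcpEntry {
  startTime: number; // milliseconds since navigation start
}

// The browser can report several LCP candidates as larger elements render.
// The most recent candidate is the one that counts.
function finalLcp(entries: LcpEntry[]): number | undefined {
  return entries.length > 0 ? entries[entries.length - 1].startTime : undefined;
}

// Browser-only helper: collects LCP candidates and reports the latest value.
// Outside a browser (no PerformanceObserver) it does nothing.
function observeLcp(report: (ms: number) => void): void {
  const Observer = (globalThis as any).PerformanceObserver;
  if (!Observer) return;
  const seen: LcpEntry[] = [];
  const observer = new Observer((list: { getEntries(): LcpEntry[] }) => {
    seen.push(...list.getEntries());
    const lcp = finalLcp(seen);
    if (lcp !== undefined) report(lcp);
  });
  // `buffered: true` also delivers candidates recorded before this call.
  observer.observe({ type: "largest-contentful-paint", buffered: true });
}
```

On a page you would call something like `observeLcp((ms) => console.log("LCP candidate:", ms))` and keep the last value logged before the user interacts with the page.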

We were able to bring the Largest Contentful Paint time of a form page down to almost 200ms (P50 value of 217ms, as detailed in our notes below). To give you some perspective, here is a contact form in Formcrafts vs the same form recreated in Typeform:

We replicated these results with PageSpeed and Debugbear here. Note that our results include the time spent on initial connection and SSL handshake. Repeat visits to your forms are much faster - clocking in around 120ms.

Our forms are Single-Page Apps with a lot of features like conditional logic, real-time math calculations, network lookups, animations, multi-step layout, 19 field types, basic access control, and more. A good part of that is included in the initial load.

Here is what we learnt in the last year and a half:

Framework selection

The previous version of Formcrafts was built in Angular 1 and React. When we decided to rebuild Formcrafts we started with Next.js. After a few weeks we realized that we missed the dev experience of Angular 1, and React had a lot of the same frustrations as before.

We stumbled upon SvelteKit, and after a few days of experimenting we realized that it was a much better fit for us.

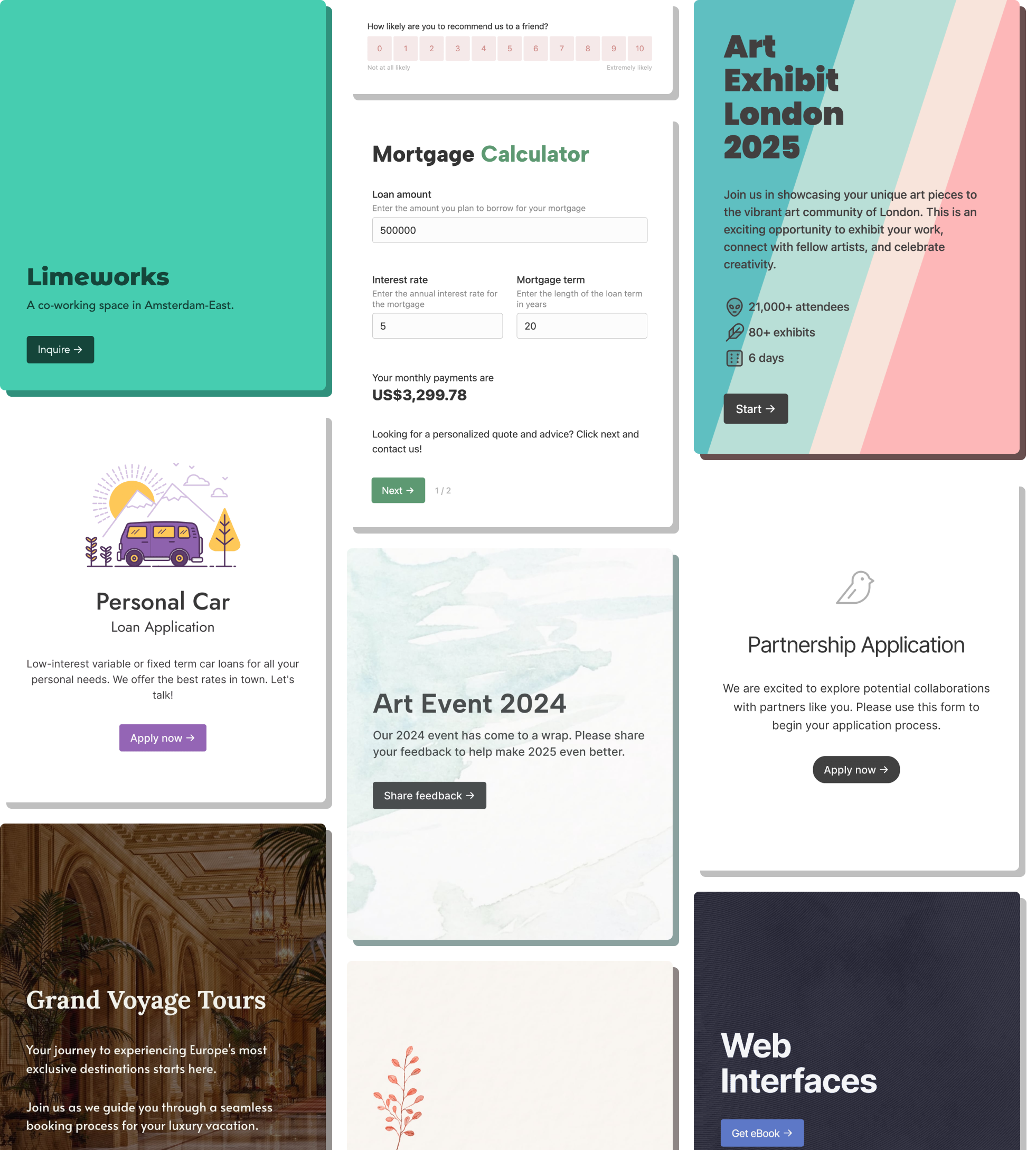

Here is a section of the CWV report from The HTTP Archive comparing origins with good LCP using Next.js and SvelteKit over the last two years:

For May 2024, 44% of Next.js origins had a good LCP, vs 54% for SvelteKit.

Even more surprising is the page weight. For May 2024, an average Next.js page clocked in at 865KB vs 362KB for SvelteKit.

Performance aside, Svelte and SvelteKit offer a much better developer experience.

Edge servers

Previously Formcrafts would serve the form page from the same location as our database, which meant high latency for people far away.

We wanted to implement distributed servers and explored a few options:

- Cloudflare Workers + KV: Cloudflare Workers seemed like a good fit. They're well distributed, and we could utilize the KV storage. After a few months we realized that we were spending too much time wrestling with Workers, since the API isn't fully Node compatible. If only there was a technology that allowed us to containerize our application and make it work reliably across platforms /s.

- Page cache: We could ask Cloudflare to simply cache the form page. We realized soon that this wouldn't work for us - the form page is quite dynamic, and the layout can change based on URL parameters. Any changes made to the form component would necessitate clearing site-wide cache. There is also no easy way to purge cache for a group of forms.

- Read-only Postgres replicas: We use Supabase, and they announced read-replicas recently. This felt like an overkill solution since we only needed a small part of our database to render the forms. Additionally, there would always be latency between Supabase and Fly.io since they talk over public servers.

And then we stumbled upon a brand new, cutting-edge technology nobody had heard of before: SQLite /s.

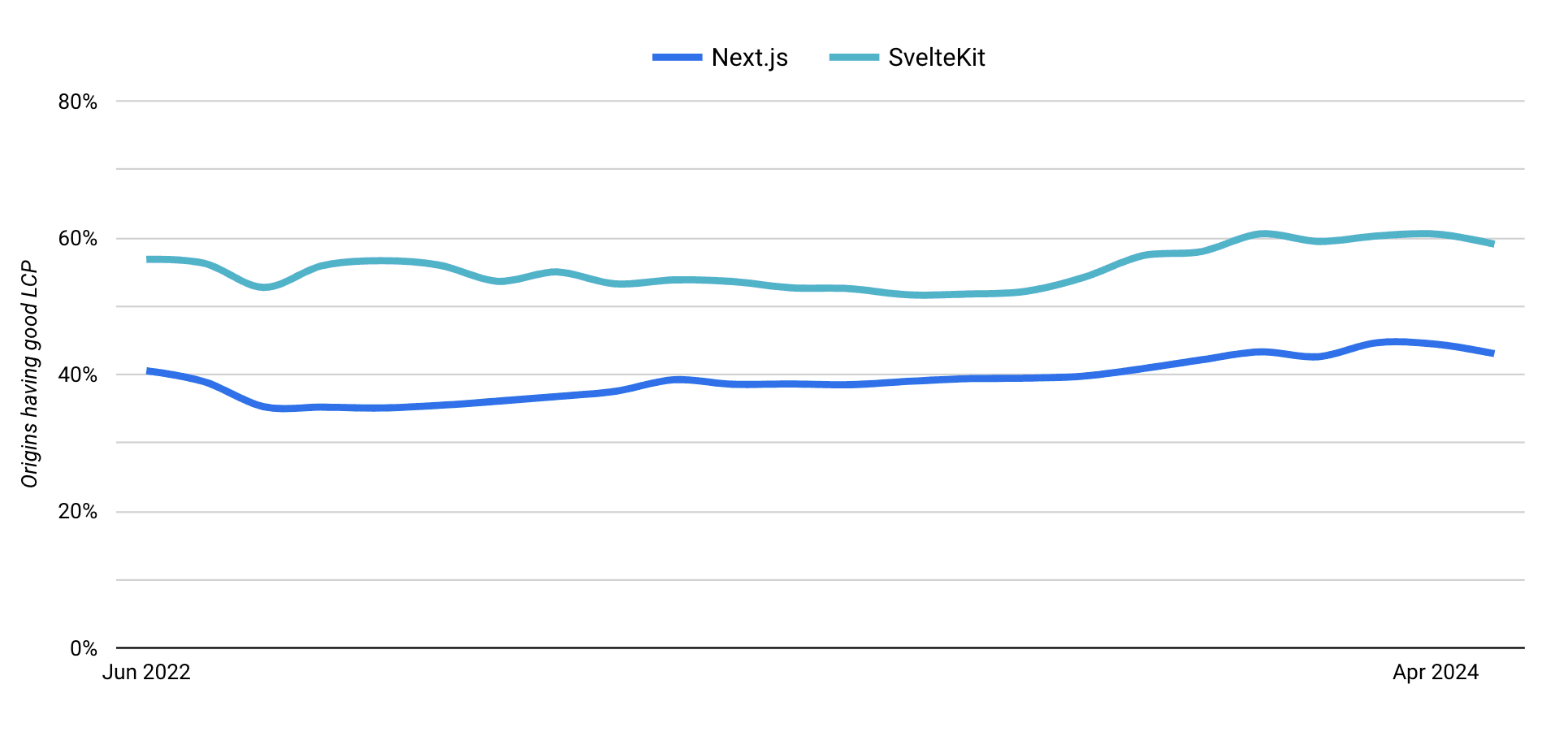

Each instance of our Fly machine runs a copy of SQLite, which contains the data it needs to render a form.

The tricky thing is that those SQLite databases need to be kept in sync. Luckily, there is a solution for that. LiteFS is a distributed file system that transparently replicates SQLite databases. Coincidentally, it is run by the same folks over at Fly.io.

When a form is requested, we first look into the local SQLite database. If the row is not found we call Supabase, while also writing the row to SQLite for future visits.
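The lookup described above (local SQLite first, Supabase on a miss, then write back) is a read-through cache. Here is a minimal sketch of that flow. The `FormRow` shape, the `LocalStore` and `RemoteStore` interfaces, and the in-memory store are all hypothetical stand-ins, since the post does not show the real schema or client code.

```typescript
// Hypothetical row shape; the real schema is not described in the post.
interface FormRow {
  key: string;
  html: string;
}

// Stand-in for the SQLite database on the edge machine.
interface LocalStore {
  get(key: string): FormRow | undefined;
  put(row: FormRow): void;
}

// Stand-in for the primary database (Supabase in the post).
interface RemoteStore {
  fetchForm(key: string): Promise<FormRow | undefined>;
}

// Read-through lookup: serve from the local store when possible,
// otherwise fetch remotely and remember the row for future visits.
async function loadForm(
  key: string,
  local: LocalStore,
  remote: RemoteStore,
): Promise<FormRow | undefined> {
  const cached = local.get(key);
  if (cached) return cached; // no cross-region call needed

  const row = await remote.fetchForm(key);
  if (row) local.put(row); // in the setup above, LiteFS replicates this write
  return row;
}

// In-memory LocalStore so the sketch runs without a database.
class InMemoryStore implements LocalStore {
  private rows = new Map<string, FormRow>();
  get(key: string): FormRow | undefined {
    return this.rows.get(key);
  }
  put(row: FormRow): void {
    this.rows.set(row.key, row);
  }
}
```

The second request for the same key never reaches the remote store, which is where the latency savings come from.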

LiteFS ensures that write requests are forwarded to the correct SQLite node (since most are read-only), and all SQLite databases are in sync.

This may have been the biggest differentiator for us so far.

Preload or old school?

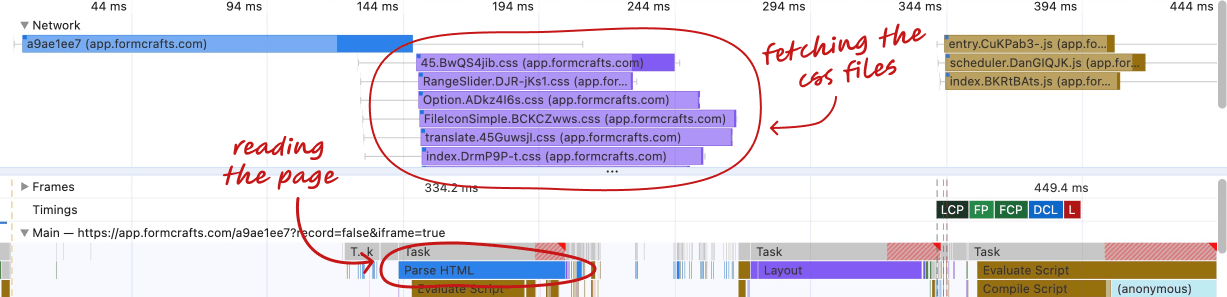

Here is how a form page request goes:

- The browser requests a form with the key abcd.

- Our server finds the form in the database, prepares the form page HTML, and sends it to the browser.

- The browser reads the first few lines and realizes it needs some CSS files to display the page. It requests these files from the server.

- While those files are being fetched, the browser, in parallel, parses the HTML.

- Once the files are received, and the browser is done with the HTML, it begins rendering the page.

Here is a waterfall of a form request with some throttling:

The problem is - the browser has to make another round trip before it has enough information to display the page. In general, it also takes more time to fetch the CSS files than it does to parse the HTML, which keeps the browser waiting.

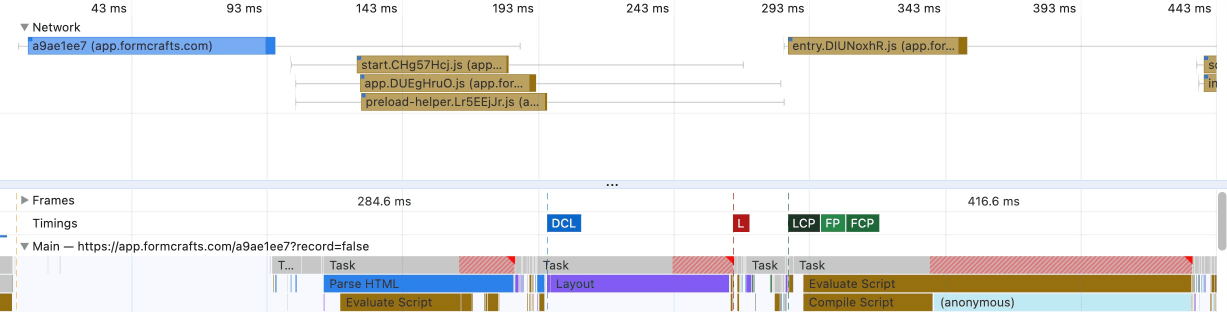

What if we went old school and inlined all the CSS?

This also noticeably improved the LCP.

Inlining CSS isn't always a good idea since the browser can't cache this CSS to be used on other pages, and it increases the HTML size. For the form page, neither of those was an issue. Even with inlined CSS our HTML is under 20kB.
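To make the idea concrete, here is a small sketch that swaps stylesheet `<link>` tags for inline `<style>` blocks in an HTML string. It is an illustration only: the function name `inlineStylesheets` and the href-to-CSS map are assumptions, and the post does not say how the inlining was actually implemented.

```typescript
// Replace <link rel="stylesheet" href="..."> tags with inline <style> blocks.
// `styles` maps each href to its CSS text; links not in the map are left as-is.
// Assumption: the CSS text does not itself contain "</style>".
function inlineStylesheets(html: string, styles: Map<string, string>): string {
  const linkTag = /<link\b[^>]*\brel=["']stylesheet["'][^>]*>/gi;
  return html.replace(linkTag, (tag) => {
    const match = /\bhref=["']([^"']+)["']/i.exec(tag);
    const css = match ? styles.get(match[1]) : undefined;
    // Keep the original tag when we have no CSS for it.
    return css !== undefined ? `<style>${css}</style>` : tag;
  });
}
```

With the CSS already in the HTML, the browser can start rendering as soon as it has parsed the document, without waiting for a second request. SvelteKit also has a `kit.inlineStyleThreshold` config option that inlines small stylesheets; whether that option was used here is not stated.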

The impact of this is more prominent for slower devices, and particularly over patchy connections.

Code splitting and shared modules

Imagine you are a handyman with a giant collection of tools. Figuring out the tools you need for each job and carrying them separately is not efficient. Carrying all the tools to every job is also inefficient.

So you create toolboxes. Each toolbox contains a different set of tools. For each job you grab the toolboxes you need.

If tools are like your functions, these toolboxes are like chunks. For a Single-Page Application (SPA) this makes things very efficient. You can add functions to your library, import them at will, and things like Rollup will work their magic behind the scenes and create efficient chunks. Each route will only request the chunks it needs.

Now here's a problem. What if 95% of your time is spent on a specific type of job? The toolbox you need for this job might also contain tools you don't need. It makes sense to have a toolbox specifically for that job, and then separate sets for other jobs.

SvelteKit's defaults work well for most use-cases. However, it doesn't know your specific needs. It's your job to know that.

Here's a real example. Our form-functions.ts file has this line:

```typescript
import { a } from "./other-functions.ts";
```

Now a() is a small function, with just 12 lines. However, adding this would increase the bundle size for the form page by 30KB, since it's creating a shared chunk which could also be used on other routes.
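A toy model shows why one small import can be expensive. It assumes that a route downloads every module reachable from its entry file, as whole modules, and it ignores tree-shaking and compression. All sizes are hypothetical except the 30KB figure mentioned above, and the `a.ts` split is just one possible fix.

```typescript
interface ModuleInfo {
  sizeKB: number;
  imports: string[];
}
type ModuleGraph = Record<string, ModuleInfo>;

// Sum the size of every module reachable from `entry` (each counted once).
function routePayloadKB(graph: ModuleGraph, entry: string): number {
  const seen = new Set<string>();
  const stack = [entry];
  let total = 0;
  while (stack.length > 0) {
    const id = stack.pop() as string;
    if (seen.has(id)) continue;
    seen.add(id);
    const mod = graph[id];
    if (!mod) continue; // unknown module: nothing to add
    total += mod.sizeKB;
    stack.push(...mod.imports);
  }
  return total;
}

// Before: a() lives inside a 30KB module that other routes also use.
const before: ModuleGraph = {
  "form-functions.ts": { sizeKB: 10, imports: ["other-functions.ts"] },
  "other-functions.ts": { sizeKB: 30, imports: [] },
};

// After: a() is moved into its own small module (hypothetical a.ts).
const after: ModuleGraph = {
  "form-functions.ts": { sizeKB: 10, imports: ["a.ts"] },
  "a.ts": { sizeKB: 1, imports: [] },
  "other-functions.ts": { sizeKB: 29, imports: ["a.ts"] },
};

const beforeKB = routePayloadKB(before, "form-functions.ts"); // 40
const afterKB = routePayloadKB(after, "form-functions.ts"); // 11
```

In this model the form route stops paying for code it never calls, while other routes still get everything through `other-functions.ts`.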

Frameworks and bundlers abstract away the complexity of modern tooling, hiding structural problems.

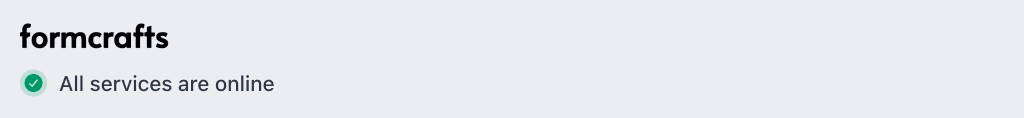

Another one. There is a harmless-looking uptime indicator on our website footer, which loads in an iFrame.

That tiny green checkbox and "All services are online" loads 28KB of gzipped CSS (more than twice the amount we need to render all our forms).

There is a developer out there who can move a few lines around and reduce their bundle size by 99%. Just saying.

Components for everything?

Coming from Angular 1, working with components was a breath of fresh air.

It seems like a common practice to use components for everything, including small SVG icons.

Take this envelope icon component for example:

```svelte
<script>
  export let size = "1rem";
  export let color = "currentColor";
  export let strokeWidth = "1.6";
</script>

<svg
  xmlns="http://www.w3.org/2000/svg"
  fill="none"
  width="{size}"
  height="{size}"
  {...$$restProps}
  class="{$$props.class || ''} focus:outline-none"
  viewBox="0 0 24 24"
  stroke-width="{strokeWidth}"
  stroke="{color}">
  <path
    stroke-linecap="round"
    stroke-linejoin="round"
    d="M21.75 6.75v10.5a2.25 2.25 0 01-2.25 2.25h-15a2.25 2.25 0 01-2.25-2.25V6.75m19.5 0A2.25 2.25 0 0019.5 4.5h-15a2.25 2.25 0 00-2.25 2.25m19.5 0v.243a2.25 2.25 0 01-1.07 1.916l-7.5 4.615a2.25 2.25 0 01-2.36 0L3.32 8.91a2.25 2.25 0 01-1.07-1.916V6.75"></path>
</svg>
```

Instead of this component, you could also simply export a string. Maybe your icon doesn't need a state:

```typescript
export const getIcons = () => {
  return {
    "envelope": `<svg
      xmlns="http://www.w3.org/2000/svg"
      fill="none"
      width="32"
      height="32"
      class="focus:outline-none"
      viewBox="0 0 24 24"
      stroke-width="1.5"
      stroke="currentColor">
      <path
        stroke-linecap="round"
        stroke-linejoin="round"
        d="M21.75 6.75v10.5a2.25 2.25 0 01-2.25 2.25h-15a2.25 2.25 0 01-2.25-2.25V6.75m19.5 0A2.25 2.25 0 0019.5 4.5h-15a2.25 2.25 0 00-2.25 2.25m19.5 0v.243a2.25 2.25 0 01-1.07 1.916l-7.5 4.615a2.25 2.25 0 01-2.36 0L3.32 8.91a2.25 2.25 0 01-1.07-1.916V6.75"></path>
    </svg>`
  }
}
```

If you replaced 20 such icon components with string functions you could reduce your bundle size by 30KB.

Most icon components are more complex than this, and whittle down to 2 lines of SVG in the end, while still sending the compiled component to the browser.
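If an icon still needs a configurable size or color, a plain function can cover that without a component. The sketch below is one way to do it. The names `iconPaths`, `iconSvg`, and `escapeAttr` are hypothetical, and the defaults mirror the props of the component shown earlier.

```typescript
interface IconOptions {
  size?: string;
  color?: string;
  strokeWidth?: string;
}

// Path data per icon name; only the envelope from this post is included.
const iconPaths: Record<string, string> = {
  envelope:
    "M21.75 6.75v10.5a2.25 2.25 0 01-2.25 2.25h-15a2.25 2.25 0 01-2.25-2.25V6.75m19.5 0A2.25 2.25 0 0019.5 4.5h-15a2.25 2.25 0 00-2.25 2.25m19.5 0v.243a2.25 2.25 0 01-1.07 1.916l-7.5 4.615a2.25 2.25 0 01-2.36 0L3.32 8.91a2.25 2.25 0 01-1.07-1.916V6.75",
};

// Escape values before placing them inside a double-quoted attribute.
function escapeAttr(value: string): string {
  return value.replace(/&/g, "&amp;").replace(/"/g, "&quot;").replace(/</g, "&lt;");
}

// Build the SVG markup for a named icon as a plain string.
function iconSvg(name: string, options: IconOptions = {}): string {
  const { size = "1rem", color = "currentColor", strokeWidth = "1.6" } = options;
  const d = iconPaths[name];
  if (d === undefined) throw new Error(`Unknown icon: ${name}`);
  return (
    `<svg xmlns="http://www.w3.org/2000/svg" fill="none" ` +
    `width="${escapeAttr(size)}" height="${escapeAttr(size)}" ` +
    `class="focus:outline-none" viewBox="0 0 24 24" ` +
    `stroke-width="${escapeAttr(strokeWidth)}" stroke="${escapeAttr(color)}">` +
    `<path stroke-linecap="round" stroke-linejoin="round" d="${d}"></path></svg>`
  );
}
```

In a Svelte template the string can be rendered with `{@html iconSvg("envelope", { size: "32" })}`. Since `{@html}` does not sanitize its input, only pass it markup you control.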

Admittedly, minification and gzip make things like this hurt less for the end-user. Partial hydration is another interesting way to solve this problem.

Closing notes

Looking back at this post I realize that most of the things we did boil down to:

- Don't pile on stuff

- Understand the technologies you are using

- Often, old school is faster

Notes about the test:

1. We try to measure load time as a unique visitor would experience it, which includes initial connection time and SSL handshake, with no caching. After each test we killed active connections and cleared host cache in the browser.

2. Here is the Formcrafts form and here is the Typeform form we used for testing.

3. Results:

| Page | 50th Percentile (P50) | 90th Percentile (P90) |
|---|---|---|
| Formcrafts | 217ms | 295ms |
| Typeform | 2940ms | 2990ms |

4. Formcrafts' nearest server is in Virginia, and the test was done from Montreal.

5. The device is an M2 Max with a network speed of over 200 Mbps.
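For readers who want to compute P50 and P90 from their own runs, here is a minimal sketch using the nearest-rank method. The post does not say which percentile method was used, and the sample values below are made up for illustration; they are not measurements from this test.

```typescript
// Nearest-rank percentile: the smallest sample such that at least p% of
// all samples are less than or equal to it.
function percentile(samples: number[], p: number): number {
  if (samples.length === 0) throw new Error("percentile needs at least one sample");
  if (p <= 0 || p > 100) throw new RangeError("p must be in the range (0, 100]");
  const sorted = [...samples].sort((a, b) => a - b);
  const rank = Math.ceil((p * sorted.length) / 100);
  return sorted[rank - 1];
}

// Hypothetical LCP samples in milliseconds (not real data).
const lcpSamples = [300, 100, 900, 500, 700, 200, 1000, 400, 800, 600];

const p50 = percentile(lcpSamples, 50); // 500
const p90 = percentile(lcpSamples, 90); // 900
```

P50 tells you what a typical visit looks like, while P90 shows how bad the slower visits get.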